Gemini, Google’s answer to ChatGPT, was recently released. It comes in three model sizes: Nano, Pro and Ultra. Currently the Pixel 8 Pro phone uses Nano, and the Google Bard chatbot uses Pro. Ultra, which is comparable in size to GPT-4, is pegged for release next year once safety checks have been completed.

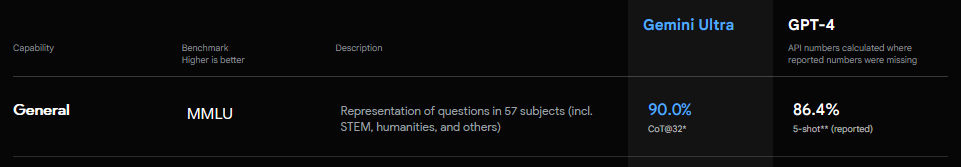

Some impressive claims were made for the Ultra variant, such as being the first model to outperform human experts on Massive Multitask Language Understanding (MMLU), a benchmark that tests the knowledge and problem-solving ability a model acquires during pretraining across 57 subjects.

Comparisons were made against GPT-4, the current industry leader among Large Language Models, with Google claiming that Gemini comes out ahead in areas such as general knowledge, reasoning, math and code.

But the grand reveal was not without controversy. It later emerged that the impressive demo of Gemini recognising a blue rubber duck as it was being drawn was faked: rather than responding in real time, the AI’s answers had been generated beforehand by feeding it still image frames and text prompts.

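The Google blog post also claimed that Gemini Ultra scored 90.4% on MMLU versus 86.4% for GPT-4. However, the two scores were obtained with different prompting methods: CoT@32 for Gemini and 5-shot for GPT-4. CoT@32 has the model generate 32 chain-of-thought samples for each question and combines them into one answer, whereas 5-shot gives the model five worked examples in the prompt and scores a single answer.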

When Gemini Ultra was also evaluated using 5-shot, its score dropped to 83.7%, putting it behind GPT-4! A gap of 2.7 percentage points may not sound like much, but at these levels of accuracy even a fraction of a percentage point can be seen as a significant difference.
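To make the difference between the two prompt settings more concrete, here is a minimal Python sketch. It is not Google’s evaluation code: the `sample` and `greedy` functions are hypothetical stand-ins for calls to a language model, and the `threshold` fallback is only a simplified take on the “uncertainty-routed” chain-of-thought that Google’s technical report describes for CoT@32 (majority vote when the model is confident, otherwise the single greedy answer).

```python
import itertools
from collections import Counter
from typing import Callable, Sequence

# Hypothetical stand-in for one LLM completion: takes a question and
# returns a final answer letter such as "A".."D".
Sampler = Callable[[str], str]


def build_k_shot_prompt(examples: Sequence[tuple[str, str]], question: str, k: int = 5) -> str:
    """k-shot prompting: prepend k worked (question, answer) examples."""
    shots = [f"Q: {q}\nA: {a}" for q, a in examples[:k]]
    return "\n\n".join(shots + [f"Q: {question}\nA:"])


def cot_at_k(sample: Sampler, greedy: Sampler, question: str,
             k: int = 32, threshold: float = 0.0) -> str:
    """CoT@k-style scoring: draw k sampled answers and take the majority vote.

    If the winning answer's share of the votes is below `threshold`,
    fall back to the single greedy answer (simplified uncertainty routing).
    """
    votes = Counter(sample(question) for _ in range(k))
    answer, count = votes.most_common(1)[0]
    return answer if count / k >= threshold else greedy(question)


def make_cycling_sampler(answers: Sequence[str]) -> Sampler:
    """Toy, deterministic 'model' that cycles through a fixed list of answers."""
    it = itertools.cycle(answers)
    return lambda question: next(it)


if __name__ == "__main__":
    greedy = lambda q: "B"  # the single greedy answer is "B"
    # Over 32 draws "A" collects 13 votes, "B" 7, "C" 6 and "D" 6.
    print(cot_at_k(make_cycling_sampler(["B", "A", "C", "A", "D"]), greedy, "toy"))  # prints A
    # With a 0.5 threshold, 13/32 is not confident enough, so it falls back to "B".
    print(cot_at_k(make_cycling_sampler(["B", "A", "C", "A", "D"]), greedy, "toy",
                   threshold=0.5))  # prints B
```

The toy run shows why the two headline numbers are not directly comparable: the same underlying “model” can return a different final answer depending on whether it is scored on one response or on a vote over 32 of them.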

Whether Gemini actually beats GPT-4 in the latest AI war for the best LLM remains to be seen, as the best measure is more qualitative: how useful it actually is for our day-to-day queries. Hopefully we will gain access to Ultra next year to find out for ourselves.
